In this video blog, we cover high availability (HA) in Kubernetes, its advantages, Deployments, Services, and load balancing, and then walk through a use case: setting up a highly scalable application in Kubernetes.

High Availability in Kubernetes

If an application runs in a single container, that container is a single point of failure: when it fails, the application goes down. Just as we would run several virtual machines for high availability, in Kubernetes we run multiple replicas of a container. To manage those replicas we use a Deployment, which is a type of controller.
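
To see why extra replicas help, here is a back-of-the-envelope calculation. It is a sketch under a simplifying assumption: each replica fails independently with the same probability `p_fail`. Real failures (a whole node or zone going down) are often correlated, so treat the result as an optimistic upper bound, and the 1% failure rate used below as a purely hypothetical number.

```python
def availability(p_fail: float, replicas: int) -> float:
    """Probability that at least one replica is up.

    Assumes every replica fails independently with probability p_fail.
    """
    if not 0.0 <= p_fail <= 1.0:
        raise ValueError("p_fail must be between 0 and 1")
    if replicas < 1:
        raise ValueError("need at least one replica")
    # The application is down only if *every* replica is down at once.
    return 1.0 - p_fail ** replicas


# Hypothetical 1% failure probability per replica.
for n in (1, 2, 3):
    print(f"{n} replica(s): {availability(0.01, n):.6f}")
```

With these assumptions, going from one replica to three raises availability from 99% to 99.9999%.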

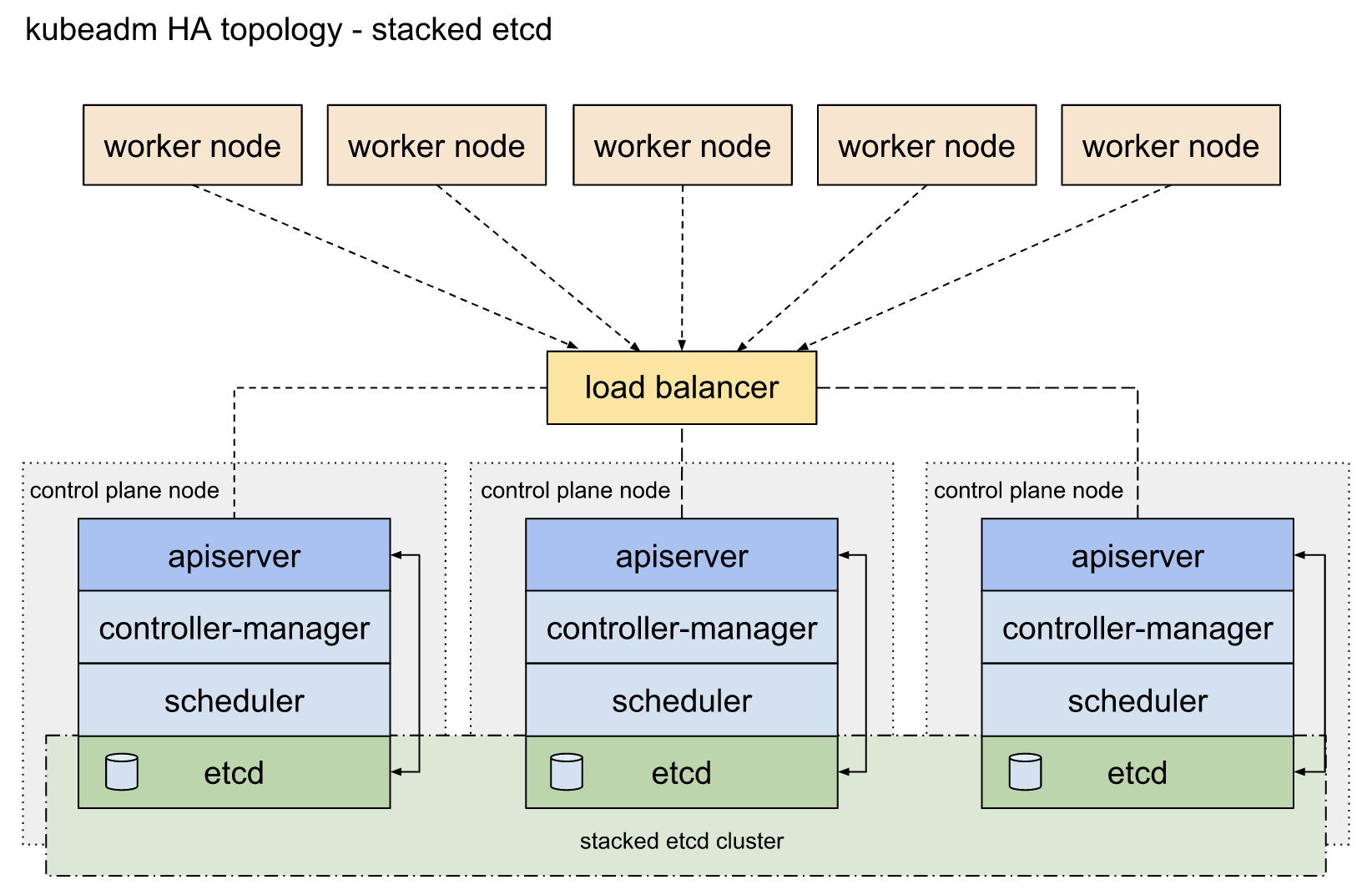

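In a Kubernetes HA environment, the control plane components, such as the API server, controller manager, scheduler, and etcd, are replicated across multiple master nodes, usually three or more so that etcd can keep a majority quorum. The API server instances all serve requests, typically behind a load balancer, while the controller manager and scheduler use leader election so that only one instance is active at a time. If any master fails, the remaining masters keep the cluster up and running.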
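
The number of masters matters because etcd only accepts writes while a majority (quorum) of its members is reachable. The sketch below assumes the common "stacked" layout in which each master runs exactly one etcd member, and computes how many masters can fail before the cluster loses quorum.

```python
def quorum(members: int) -> int:
    """Smallest majority of an etcd cluster with `members` members."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a majority is still available."""
    return members - quorum(members)


for n in range(1, 6):
    print(f"{n} master(s): quorum={quorum(n)}, can lose {tolerated_failures(n)}")
```

Under this assumption two masters tolerate no more failures than one, which is why three is the usual minimum, and five masters can lose two.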

Advantage of High Availability in Kubernetes

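A Kubernetes master node runs several control plane components, such as kube-apiserver, etcd, and kube-scheduler. If a cluster has only one master node and it fails, the control plane becomes unavailable: pods that are already running may keep running, but you can no longer schedule new pods, scale, or replace failed ones, which can have a big impact on the business. To solve this, we deploy multiple master nodes, which provides high availability for a single cluster and can also improve performance by spreading API requests across several API servers.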

Deployment

A Deployment is a controller that manages a set of identical pod replicas through a ReplicaSet. With a Deployment we can scale the number of replicas up and down. We can also define Deployments to create new ReplicaSets, for example during a rolling update, or to remove existing Deployments and adopt all their resources with new Deployments.
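
As a concrete illustration, the sketch below builds a minimal `apps/v1` Deployment manifest as a plain Python dict and scales it by changing `spec.replicas`. The name `web` and the image `nginx:1.25` are hypothetical placeholders; only the field layout follows the Deployment API.

```python
import copy
import json


def make_deployment(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


def scale(deployment: dict, replicas: int) -> dict:
    """Return a copy of the manifest with a new replica count."""
    if replicas < 0:
        raise ValueError("replicas cannot be negative")
    scaled = copy.deepcopy(deployment)
    scaled["spec"]["replicas"] = replicas
    return scaled


web = make_deployment("web", "nginx:1.25", replicas=3)
print(json.dumps(scale(web, 5), indent=2))
```

`kubectl apply -f` accepts JSON as well as YAML, so the printed output could be applied directly; `kubectl scale deployment web --replicas=5` is the imperative equivalent of the `scale` helper.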

Services

Kubernetes Services enable communication between the components of an application, both inside and outside the cluster, and help us connect applications to other applications. A Service provides a single stable IP address and DNS name through which a set of pods can be reached; it picks those pods by matching their labels against the Service's selector.
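
The following is a simplified model of how a Service's selector turns a set of pods into its list of backend IPs. The pod names and IPs are made up, and the real work is done by Kubernetes' endpoint controllers, which also take readiness into account; here readiness is reduced to a single boolean.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict
    ready: bool = True


def matches(selector: dict, labels: dict) -> bool:
    """Equality-based selector: every key/value pair must be present on the pod."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(selector: dict, pods: list) -> list:
    """IPs of the ready pods that the Service would send traffic to."""
    return [p.ip for p in pods if p.ready and matches(selector, p.labels)]


pods = [
    Pod("web-1", "10.244.1.5", {"app": "web"}),
    Pod("web-2", "10.244.2.7", {"app": "web"}),
    Pod("db-1", "10.244.1.9", {"app": "db"}),
]
print(endpoints({"app": "web"}, pods))  # ['10.244.1.5', '10.244.2.7']
```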

Load balancing

Load balancing is the efficient distribution of incoming network traffic across a group of backend servers. A load balancer is a device (or piece of software) that distributes network or application traffic across a cluster of servers. It plays a big role in achieving high availability and better performance for a cluster, because no single backend receives all the traffic and a failed backend can be taken out of rotation.
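
Round robin is one of the simplest load-balancing strategies: hand out backends in a fixed rotating order. The sketch below is only an illustration of that strategy with hypothetical backend IPs; kube-proxy's actual choice depends on its mode (for example, iptables mode picks a backend at random).

```python
import itertools


class RoundRobinBalancer:
    """Hands out backends in a fixed rotating order."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("need at least one backend")
        self._cycle = itertools.cycle(list(backends))

    def next_backend(self) -> str:
        """Return the backend that should receive the next request."""
        return next(self._cycle)


lb = RoundRobinBalancer(["10.0.0.11", "10.0.0.12", "10.0.0.13"])
print([lb.next_backend() for _ in range(5)])
# ['10.0.0.11', '10.0.0.12', '10.0.0.13', '10.0.0.11', '10.0.0.12']
```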

Setting Up a Scalable Application: Use Case

In this HA cluster, we expose the container through a NodePort Service. Because the cluster network forms a mesh between the nodes, the NodePort is open on every node: even if your container is running on worker node one, a request sent to worker node two on that port is routed to the pod on worker node one (with the default `externalTrafficPolicy: Cluster`), so it still reaches the correct destination.
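
The toy model below traces the hops of a request that enters the cluster on one node's NodePort while the only backend pod lives on another node. The node names, pod name, and port `30080` are hypothetical, and it assumes the default `externalTrafficPolicy: Cluster`; it only checks the default NodePort range (30000-32767) and always picks the first backend instead of load-balancing.

```python
DEFAULT_NODEPORT_RANGE = range(30000, 32768)  # 30000-32767 inclusive


def route(entry_node: str, node_port: int, pod_node: dict) -> list:
    """Return the hops a packet takes from a NodePort to a backend pod.

    `pod_node` maps each backend pod name to the node it runs on.
    """
    if node_port not in DEFAULT_NODEPORT_RANGE:
        raise ValueError("NodePort outside the default range")
    if not pod_node:
        raise ValueError("no backend pods")
    # Simplification: always pick the first backend; kube-proxy load-balances.
    pod, target_node = sorted(pod_node.items())[0]
    hops = [f"{entry_node}:{node_port}"]
    if target_node != entry_node:
        # Traffic crosses the cluster network to the node hosting the pod.
        hops.append(target_node)
    hops.append(pod)
    return hops


print(route("worker-2", 30080, {"web-1": "worker-1"}))
# ['worker-2:30080', 'worker-1', 'web-1']
```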

In Kubernetes, we can load-balance traffic across containers just as we would across different virtual machines. Load balancing keeps working even when a pod is deleted manually or accidentally, or is restarted: the Deployment makes sure a replacement pod is brought back, because Kubernetes auto-heals pods, and traffic is spread across whichever pods are currently running.
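
Auto-healing comes from a control loop that keeps comparing the desired replica count with the pods that actually exist. The sketch below is a heavily simplified, hypothetical version of one pass of that loop; the real ReplicaSet controller works through the API server and chooses which pods to delete with its own ranking rules.

```python
import itertools

_ids = itertools.count(1)


def new_pod(prefix: str) -> str:
    """Create a hypothetical pod name with a fresh numeric suffix."""
    return f"{prefix}-{next(_ids)}"


def reconcile(desired: int, running: list, prefix: str = "web") -> list:
    """One pass of a simplified controller loop.

    Compares the desired replica count with the pods that are running
    and creates or deletes pods to close the gap.
    """
    pods = list(running)
    while len(pods) < desired:
        pods.append(new_pod(prefix))  # self-heal: replace missing pods
    while len(pods) > desired:
        pods.pop()                    # scale down: remove extra pods
    return pods


pods = reconcile(3, [])    # ['web-1', 'web-2', 'web-3']
pods.remove("web-2")       # a pod is deleted by accident
pods = reconcile(3, pods)  # ['web-1', 'web-3', 'web-4']
print(pods)
```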

When a pod is re-created, it is assigned a new IP address. Even so, reachability does not change, because clients connect to the Service IP address, which stays constant. Behind the scenes, Kubernetes keeps monitoring whether pods go up or down and updates the Service's endpoint list with the current pod IP addresses, so traffic sent to the Service IP is always forwarded to live pods.
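
A small model of that behaviour: the Service keeps one fixed virtual IP while its endpoint list follows pods as they go down and come back with new addresses. The class, pod names, and IPs are hypothetical; in a real cluster the endpoint list is maintained by Kubernetes controllers and the forwarding is done by kube-proxy.

```python
class Service:
    """Toy Service: a fixed virtual IP plus an endpoint list kept in sync."""

    def __init__(self, cluster_ip: str):
        self.cluster_ip = cluster_ip  # never changes for the Service's lifetime
        self.endpoints = {}           # pod name -> current pod IP

    def pod_up(self, name: str, ip: str) -> None:
        self.endpoints[name] = ip

    def pod_down(self, name: str) -> None:
        self.endpoints.pop(name, None)

    def resolve(self) -> list:
        """Pod IPs that a client hitting cluster_ip can currently reach."""
        return sorted(self.endpoints.values())


svc = Service("10.96.0.20")
svc.pod_up("web-1", "10.244.1.5")
svc.pod_down("web-1")                  # the pod is deleted...
svc.pod_up("web-1-new", "10.244.2.8")  # ...and re-created with a new IP
print(svc.cluster_ip, svc.resolve())   # 10.96.0.20 ['10.244.2.8']
```

Clients only ever use `cluster_ip`, so they are unaffected by the pod's IP change.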